Overview

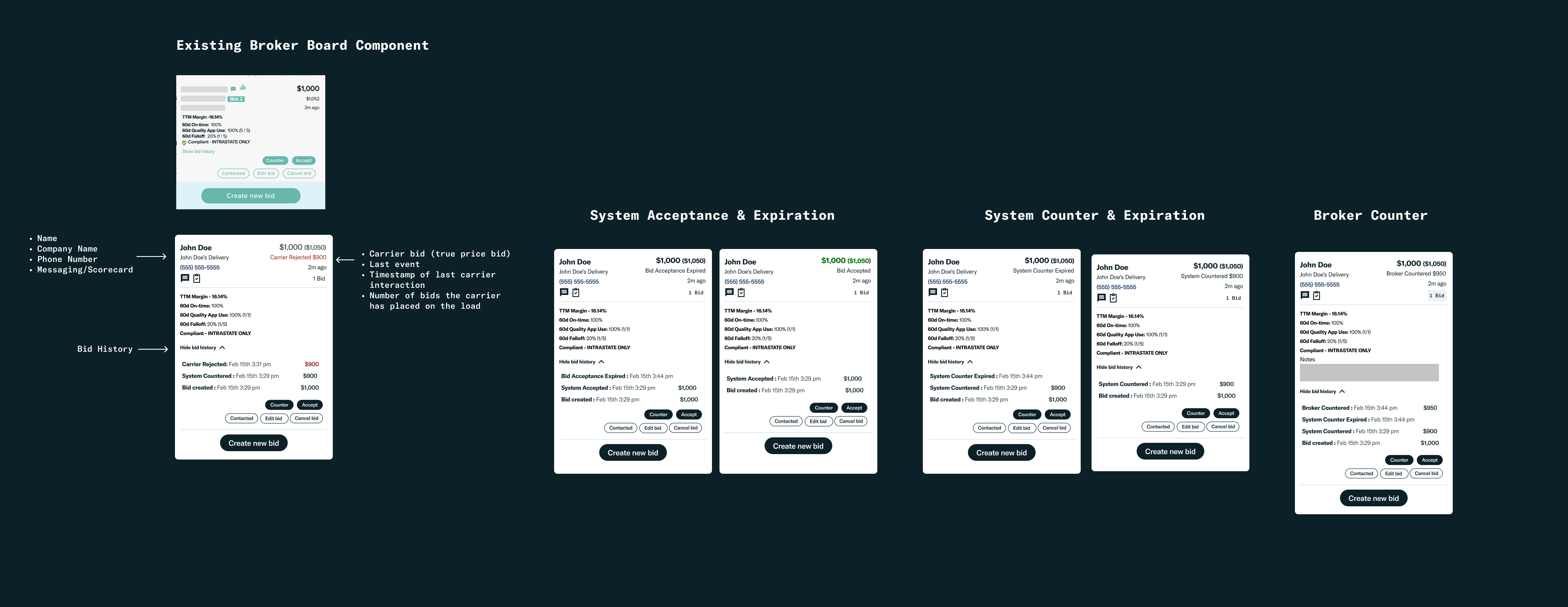

At Convoy, I had the pleasure of working in the carrier marketplace, on projects that directly impacted carriers and how they interacted with Convoy on a day-to-day basis. I worked on many different aspects of the app, but primarily on how carriers found, bid on, and booked loads.

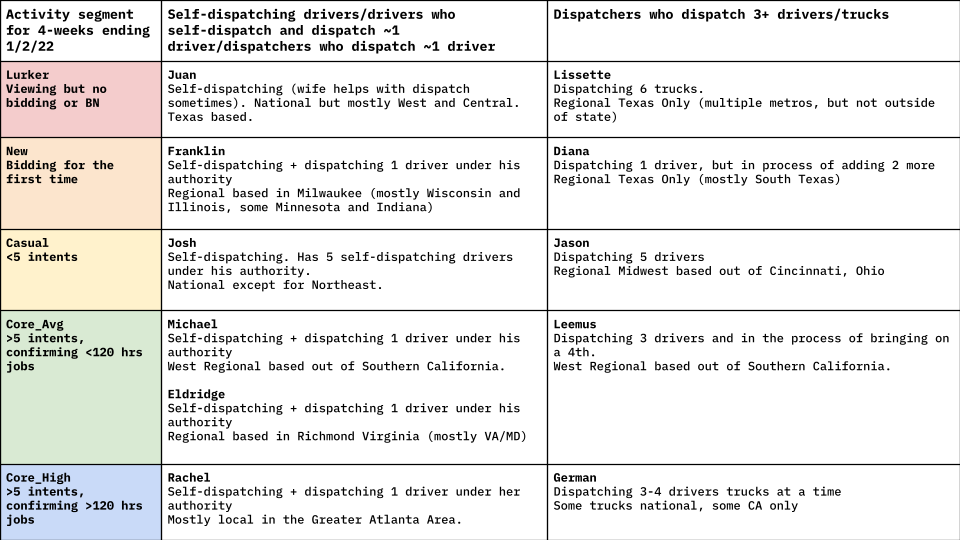

In January of 2022, our UX Researcher, Janell Rothenberg, asked me to join a project called Next Gen auctions.

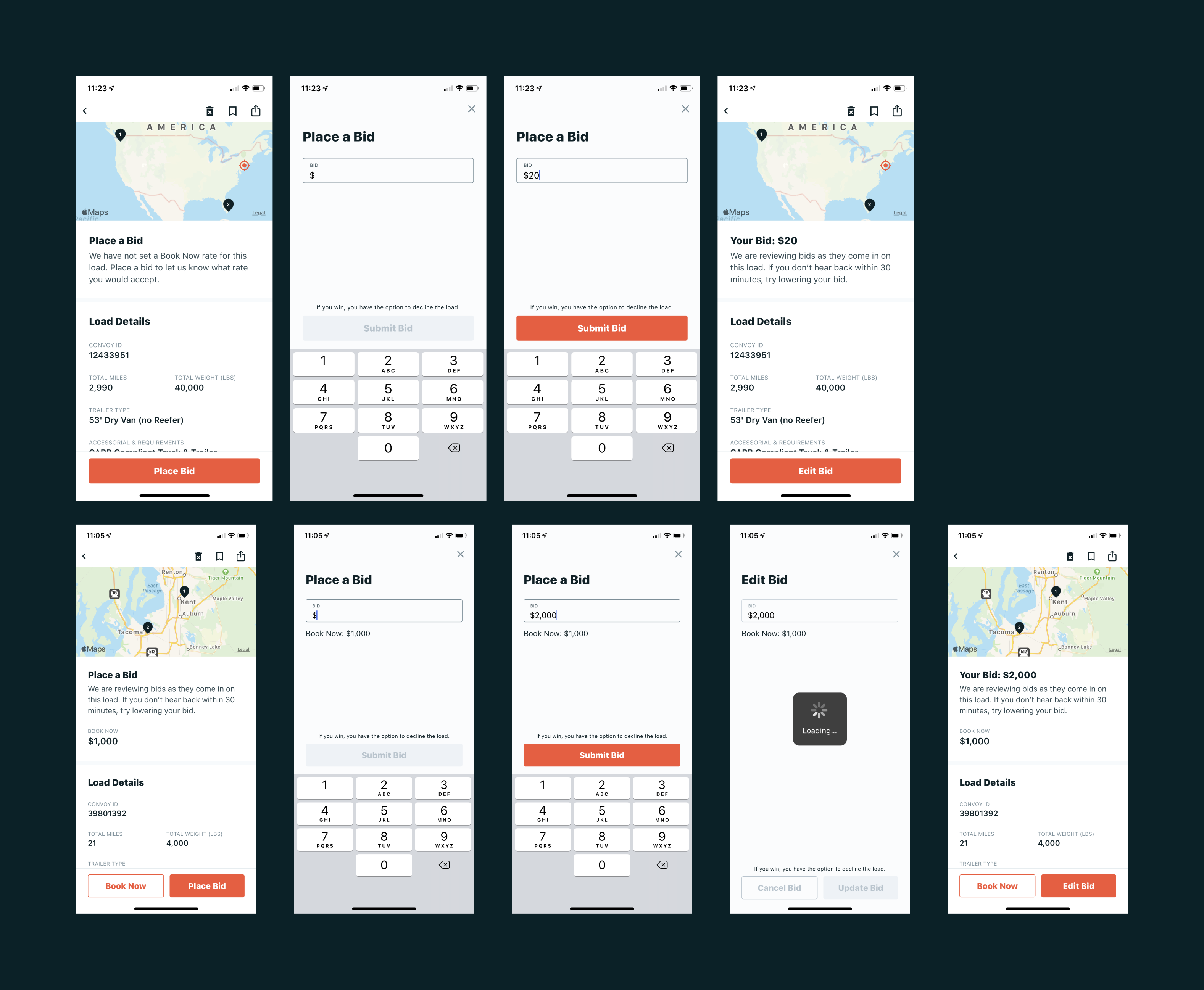

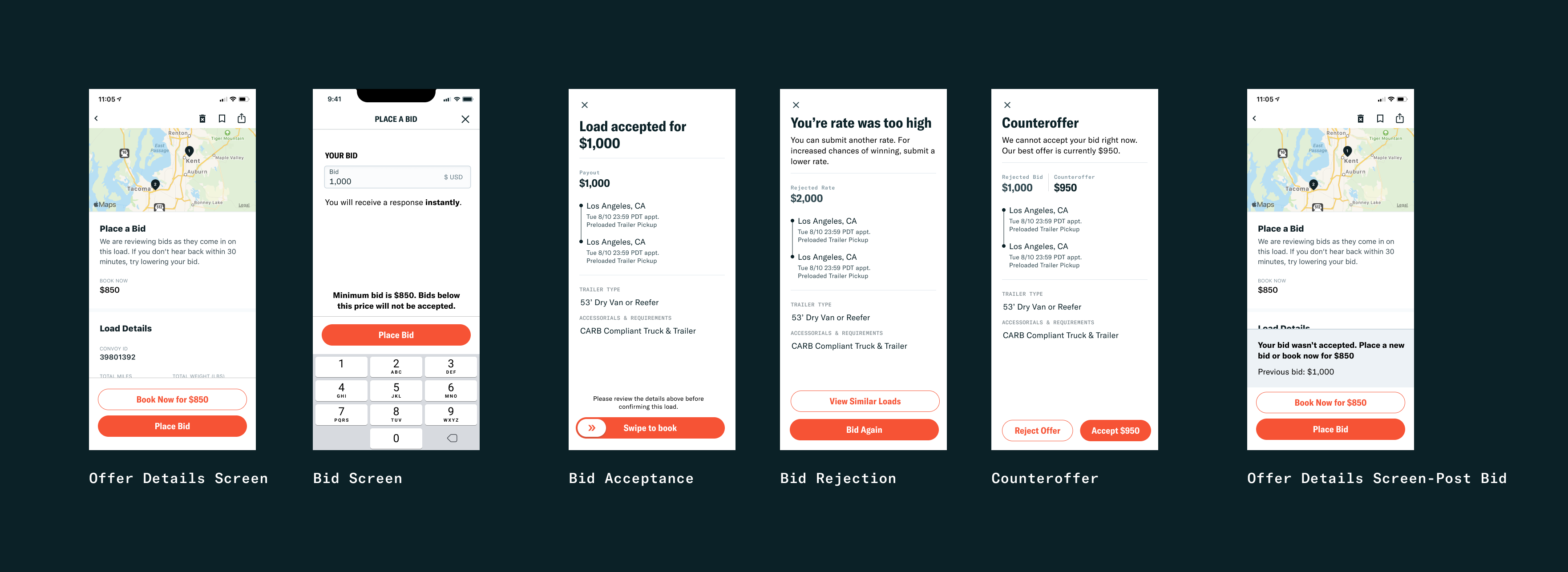

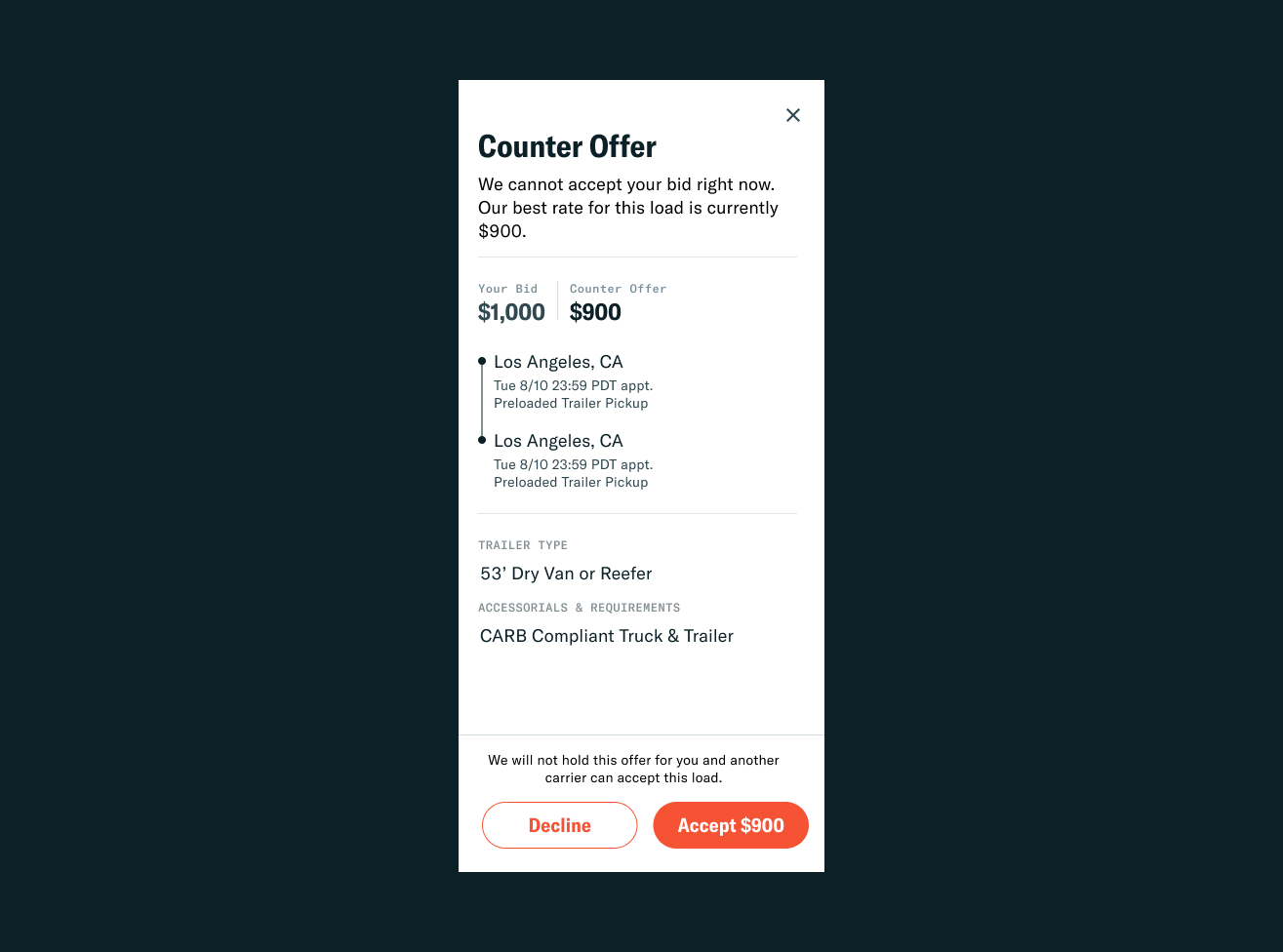

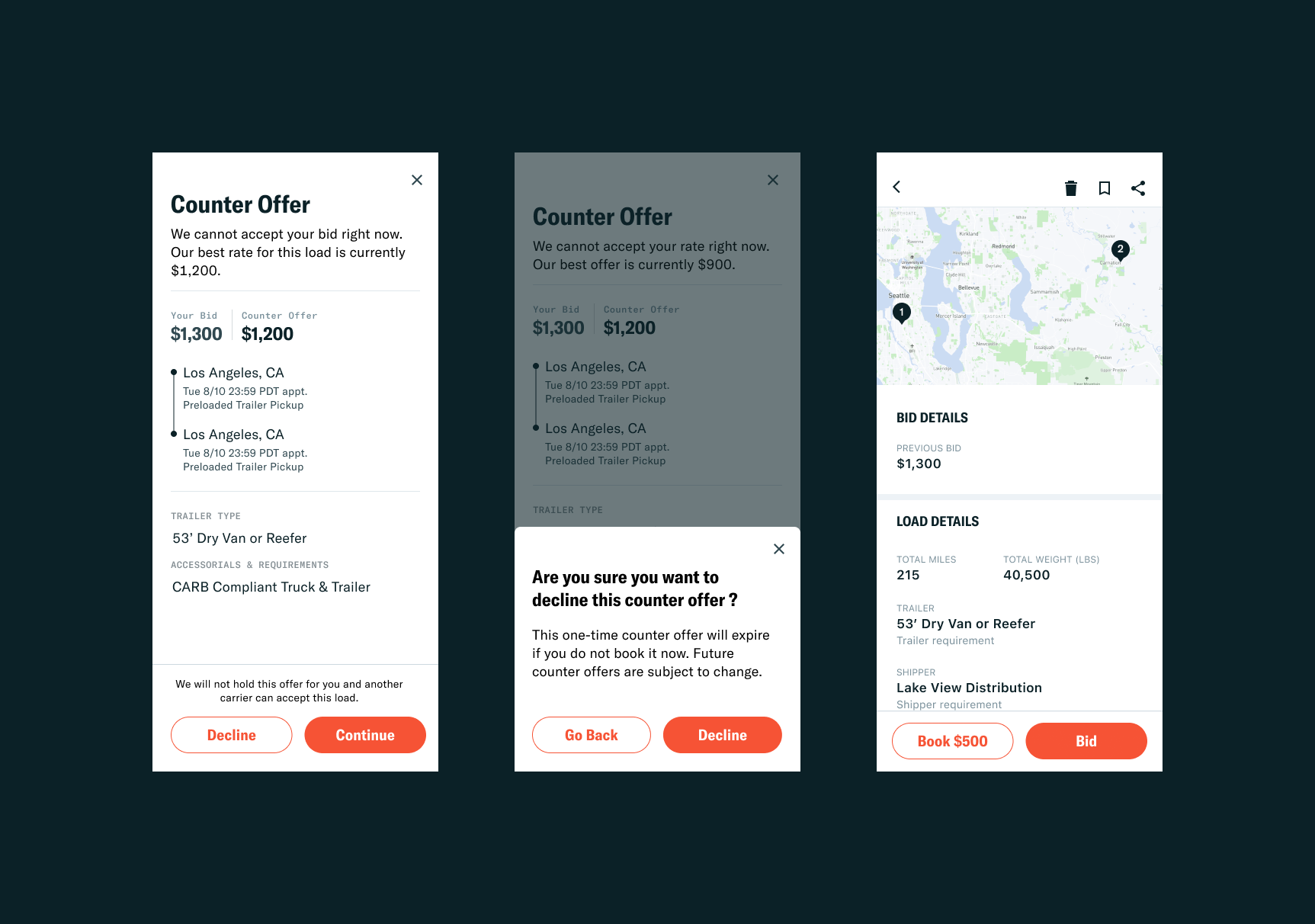

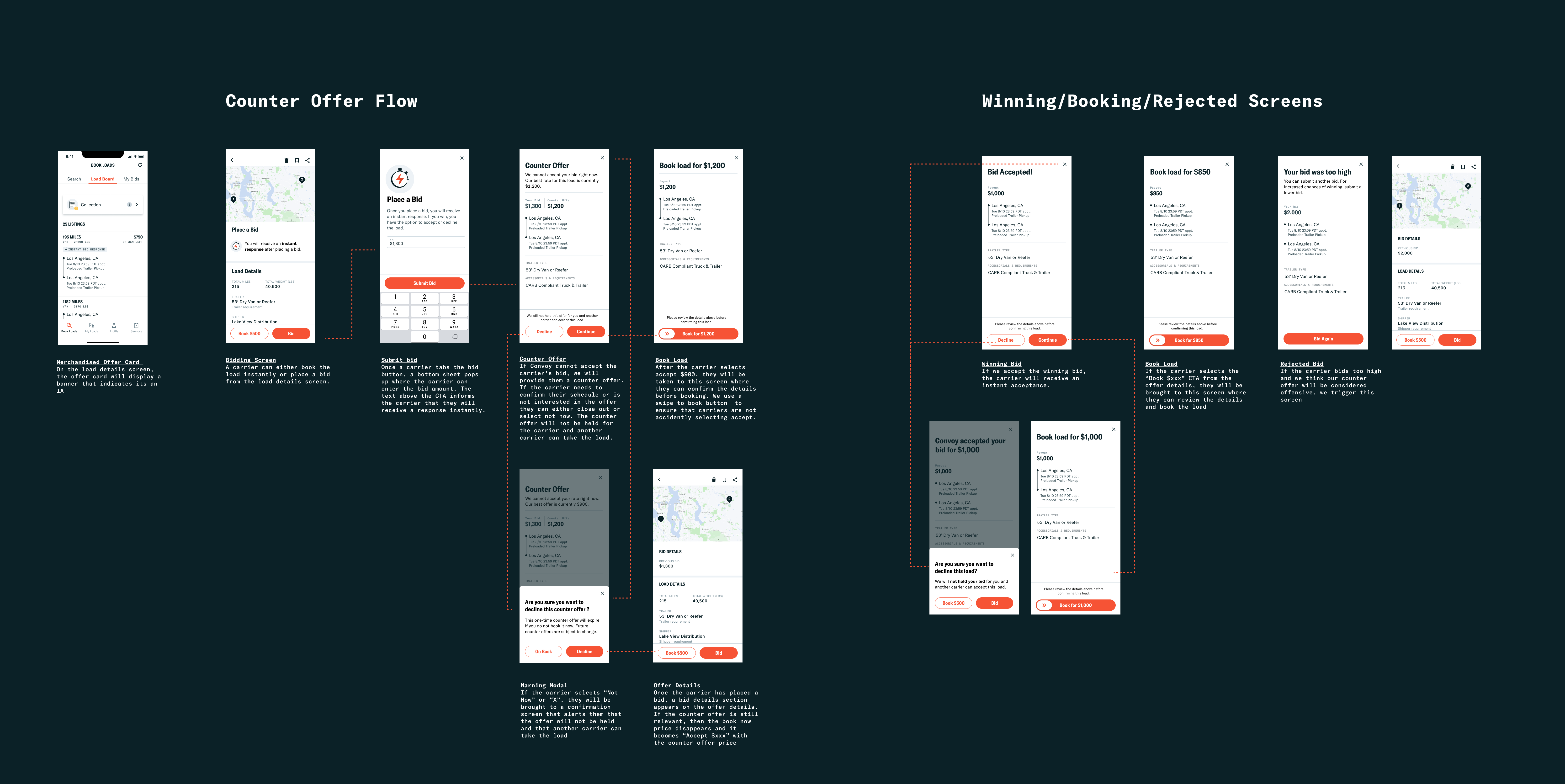

In previous research, Janell identified critical limitations in Convoy’s current bidding and booking experience. Carriers’ number one complaint about bidding was that Convoy didn’t seem to value their time: they spent too long waiting for a response to their bids. For a carrier, waiting for a response means they can’t plan effectively, and thus everything comes down to the last minute. With the newly proposed auction, carriers would receive an immediate response after placing a bid: an instant acceptance, an instant rejection, or a counteroffer. The concept was simple, but I later learned that giving an instant response was no easy feat.

What are the carrier problems this project aims to solve?

1. Sentiment towards our bidding and booking process impacts whether or not carriers want to work with Convoy, and negative or mixed sentiment towards this process is common among our largest (and least engaged) segments.

2. Most carriers who use Convoy have a lower preference for finding work with us than with our competitors. They are also far less likely to see our bidding and booking processes as better than our competitors’ ways of bidding and booking.

Project Goal

Reduce the amount of time carriers have to wait by providing an immediate response after they place a bid.

My Partners: UX research, data science, product management, engineering, marketing, branding, & support from my fellow design team

Biggest Learning

How to use and weigh qualitative and quantitative data to inform design decisions.

When making design decisions, it's crucial to evaluate the strengths and weaknesses of both qualitative and quantitative data for the specific design problem at hand. It is important to create a design that is scalable, starting with a basic experience based on initial user research and assumptions drawn from data. That basic experience then becomes the foundation for a comprehensive research plan to collect qualitative and quantitative feedback through experimentation.